by Daniel Jason Fournier Jr.

When Siri was first introduced on the iPhone, it was a groundbreaking technology that was light-years ahead of the competition, yet it could do only a fraction of what it can do now. Today Siri can manage your schedule, call or text friends and coworkers, and return search results for almost any question you might ask. There are now many voice assistants on the market, including Apple’s Siri, Amazon’s Alexa, and the Google Assistant. All of them could be considered miracle devices, but they have not come without a cost. Every year there are more cases of voice assistants “abusing their power” and listening in on conversations their users never meant them to hear. There will have to be a trade-off between privacy and a capable voice assistant, but when the time comes for consumers to choose between a useful item in their house and their privacy, they should always choose their privacy first.

One of the main reasons to choose privacy over convenience is the way Amazon and Google use data. Voice assistants have been known to record conversations and send them back to analysis teams. The Amazon Alexa analytics team, for instance, is tasked with listening to and analyzing recordings made by Alexa devices. On a typical day these are ordinary recordings of commands and responses between the user and the device, but some recordings are far more dramatic. According to Sarah Perez, such teams have analyzed recordings of assaults and other criminal activity. In those cases the device helps detect crime and supplies the authorities with audio evidence, but if I willingly put a device in my house, I would not want it to be able to hand the authorities evidence of criminal activity in my residence. Imagine a device you placed in your own home having the ability to testify against you in court. The inanimate object that sets up your appointments could also put you in jail. Beyond analyzing potential criminal goings-on in your house, the recordings also give Amazon your home address. Perez later states that the Alexa analytics team has access to precise coordinates that locate the exact place where a voice clip came from. A team member can simply plug these coordinates into Google Maps and find out exactly where the Alexa device is. The idea is that Amazon will use the data to inform food and package delivery services of your location, but isn’t the address you supply when you sign up for an Amazon account enough?

Not only do these recordings get sent to Amazon, but a malfunction could also share them with anyone in your contacts list. In 2018, a woman’s voice assistant sent a recording of a private conversation she had with her husband to one of her husband’s employees. According to Niraj Chokshi, the Alexa device misheard a series of commands during their conversation and sent the recording as a voice message. Amazon explained that the conversation woke the device when it misheard “Alexa,” that it then misheard a request to “send message,” asked “to whom,” and finally misheard the name of one of her husband’s contacts. This chain of events is hard to believe: the device misheard so many commands that a private conversation ended up being shared with someone other than Amazon itself. Chokshi later states that, according to the woman, she never heard the device ask for permission to send the message or ask whom to send it to, even though the volume was set fairly high. The woman and her husband used their Alexa devices to control their lights, heating system, and even their security system. Based on these events, Alexa does not seem reliable enough to be trusted with a security system. If it can mishear a command to send a recording to a third party, what is stopping it from mishearing “Alexa, disengage the security system”? The woman disconnected all of her Alexa devices, and her story has led to an investigation into these voice assistants. The recording reached a third party even though passive recordings made by an Alexa device are only supposed to be sent to the Amazon analytics team.

Misheard commands have led not only to private conversations being shared but also to some fairly innocent laughs from Alexa devices. Peter Nowak of The National reported that a series of malfunctions led Alexa devices to laugh without being prompted. Nowak relays Amazon’s explanation that the Echo devices were responding to very quiet, misheard commands. This suggests that the device hears at superhuman levels, which could make it very easy to operate a voice assistant through a wall or a locked door. Although Amazon was quick to respond and fix the issue, the episode shows users once again that voice assistants are volatile and untrustworthy. Misheard commands could spell disaster for anyone who links a credit card to their voice assistant. The list goes on as more and more cases of voice assistants mishearing commands and leaking private information to third parties come to light. These few examples highlight how dangerous voice assistants can be, and when the time comes for consumers to choose between a useful item in their house and their privacy, they should always choose their privacy first.

Nowak later states that “Smart speakers’ growing popularity is making them a target.” It would make sense for hackers to wait until a smart speaker has become a necessary part of a smoothly running household and then start data mining. That is the best scenario for hackers but not for consumers. Some people will be willing to stop using their voice assistant, but others will see the device as something they need in order to control their homes, which leaves them open to having their information stolen. Of course, there is always a risk of identity theft in simple things like shopping online or even using a credit card, but recordings of your voice pose problems of their own. Several groups are taking it upon themselves to show users and the corporations in charge how easy it is to steal data from voice assistants.

One of these teams, from the University of California, published a paper on how to exploit voice assistants. According to Chokshi, the researchers were able to hide commands in spoken text and in recorded music. The user would be unaware that any command had been given, yet the voice assistant would hear it and act on it. Nowak cites another team of researchers, from China, who created an AI capable of recreating a person’s voice from less than one minute of recorded speech. This ability poses a major threat to everyone, not just voice assistant users, but those who use the devices have the most readily available voice data. A cloned voice could be used over the phone with banks, phone carriers, and many other legitimate businesses that hold private data. Using the data found within the voice assistant, hackers would be able to answer security questions or take over an email account to gain access to any number of other personal accounts. This may seem far-fetched on its own, but there are many more ways that hackers can reach your information through voice assistants, and many people are trying to show how simple it can be.

For example, Security Research Labs (SRLabs) is a hacking group put together to show corporations the flaws in their security and in the devices themselves. Groups like this are important because they expose weaknesses in a company’s current software. They are usually well regarded because they hack into devices for the noble purpose of protecting users and notifying the company in charge rather than stealing other people’s data. In its research, SRLabs found that it could obtain passwords and send voice recordings to any location it chose, on both Google Home and Alexa devices. Obtaining passwords was very simple: all the researchers needed to do was get a skill approved by the Amazon or Google review team. Skills are like apps for a voice assistant. They add functions to your device, such as controlling thermostats and smart locks or playing music from Pandora or Spotify. They are added through an app on your phone and usually require nothing more than the click of a button to install.

SRLabs created ordinary skills for both Google Home and Amazon Alexa. Before a skill is placed in the skill store, Amazon or Google reviews it to make sure there is nothing nefarious about it. After the review, SRLabs changed the behavior of the skill, a change that does not require a second review by Amazon or Google. The researchers replaced the original opening message with a fake error message, which makes the user believe the skill does not work. For example, SRLabs changed the opening message to say: “This skill is not currently available in your country.” Users think the device is no longer listening, but after the error message the device reads out many lines of unpronounceable code, which creates a long silence before a second message. That second message pretends to be a software update and asks the user for their password. SRLabs used the phrase “An important security update is available for your device. Please say start update followed by your password.” The skill collects the data by recording the user’s reply and sending it to an alternate location. Without knowing what is really going on in the code, the user would just think they had downloaded a bad Alexa or Google Home skill. In reality, they gave away their password to whoever is on the other end. SRLabs used a very similar technique to make recordings and send them to an alternate location. The process of creating a skill and changing it after review is the same. This time, instead of inserting a pause in the middle, the skill stays open for a few seconds after the fake error message. If the device hears a set keyword, the skill records the next sentence and sends it off. These hacks could be carried out by anyone with a very small amount of coding experience, and the malicious skills can be installed on your device from the existing skill store. Hackers have plenty of ways to use this stolen information, considering that many people use the same or similar passwords for many of their personal accounts.
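The trick described above depends on two things a reviewer could look for in the text a skill asks the device to speak: a long run of characters that cannot be pronounced, and a request for a password. The sketch below shows what such a check might look like. It is only an illustration under stated assumptions: the function names, the threshold, and the list of patterns are hypothetical, the idea that the silent characters fall into the Unicode categories tested here is an assumption, and nothing in the sources says Amazon or Google performs a check like this.

```python
import re
import unicodedata
from typing import List

# Hypothetical patterns a reviewer might treat as a request for a credential.
CREDENTIAL_PATTERNS = [
    re.compile(r"\bpassword\b", re.IGNORECASE),
    re.compile(r"\bpasscode\b", re.IGNORECASE),
    re.compile(r"\bPIN\b"),
]

# Assumption: characters a speech engine cannot pronounce fall into these
# Unicode categories (unassigned, private-use, and surrogate code points).
SILENT_CATEGORIES = {"Cn", "Co", "Cs"}


def count_unpronounceable(text: str) -> int:
    """Count the characters in text that belong to a silent category."""
    return sum(1 for ch in text if unicodedata.category(ch) in SILENT_CATEGORIES)


def review_response(text: str, max_silent_chars: int = 3) -> List[str]:
    """Return a list of warnings for one spoken response of a skill."""
    findings = []
    if count_unpronounceable(text) > max_silent_chars:
        findings.append("many unpronounceable characters (possible hidden pause)")
    if any(pattern.search(text) for pattern in CREDENTIAL_PATTERNS):
        findings.append("asks the user for a credential")
    return findings
```

Running `review_response` on an ordinary reply should return an empty list, while a reply that combines the fake error message, a long stretch of silent characters, and the fake update prompt quoted above should return both warnings. A check like this would only help if it ran every time a skill’s responses changed, since the attack works by altering the skill after the initial review.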

These devices are clearly dangerous and easy to turn against the user, and they are not only in your home. Voice assistants have been in cars for several years. With the recent release of Alexa Auto, these existing voice assistants have come under more scrutiny. Car manufacturers such as Lexus, Ford, and GMC, among others, have been offering their own voice assistants built into the car and its navigation system. These assistants keep growing in popularity because they make it safer to use the navigation features, the radio, and some of the car’s other potentially distracting functions. Ted Kritsonis asks, “If you are telling your car to do something, how certain are you that someone else isn’t listening in?” None of us can be certain whether or how our voices are being used by the automakers’ own voice assistants, but the Alexa team has been very forthright in telling users of Alexa Auto that it collects data to improve the product. The team adds that users are able to delete recordings through the Alexa app on their phone. Kritsonis mentions some of the features of having Alexa Auto in your car: it can open your garage door, turn on your lights, and even unlock your front door, all through location-based technology and without any vocal command. It would be amazing to pull up to your house and have everything unlocked and ready for you. It seems like something from a sci-fi movie. In reality, the technology is far from that, and in some ways it creates more mundane tasks. For instance, if you wanted to keep your commands private, you would need to navigate the Alexa app and delete all of the recordings Alexa made on your drive to and from work before Amazon had saved and analyzed them. This seems like more of a drawback than a feature. The time saved by having your house made ready for your return is spent deleting the recordings Alexa Auto made of your voice. Amazon isn’t only branching into cars; it is also weaseling its devices into places in your house you might not expect.

Geoffrey Fowler of the Roanoke Times asks, “Will it soon feel normal to say, ‘Alexa, microwave one bag of popcorn’?” Statements like this could become normal, as Amazon has showcased more than 70 new Alexa devices, including a microwave and a wall clock. Fowler later reports that the majority of these devices are kitchen focused. This seems smart, since the kitchen is the place where you are consistently in need of an extra pair of hands. For example, the clock will automatically shift to daylight saving time, which is nothing new, but it will also show the user the time left on a timer set through the Alexa voice assistant. The microwave has no special functions of its own; Alexa simply programs the cook time. Fowler used the microwave and an Alexa voice assistant to cook a potato and says that although he did not need to know how long to cook it, he still had to prepare it and place it in the microwave. The device takes away the task of remembering but still leaves all of the preparation to you. It seems that after establishing its base of main voice assistant devices, Amazon is starting to branch into devices controlled by Alexa. These come in all forms, like the new line of Alexa-ready devices or the already available Wi-Fi-controlled garage doors and door locks. They add a lot of functionality to your existing Alexa voice assistant, but they also make it all the harder to get rid of. Once your whole house is controlled by Alexa, it would be much more difficult to abandon the devices in the event of a data breach or any other privacy concern.

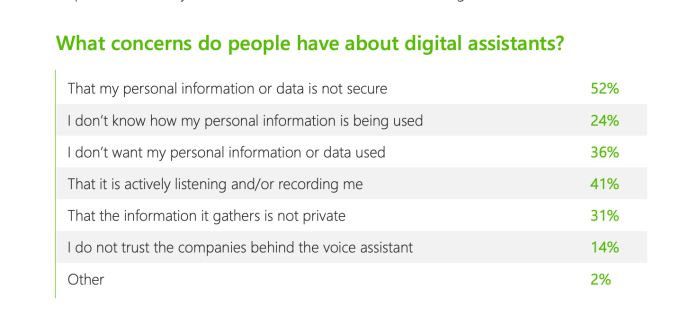

Fig. 1. What concerns do people have about digital assistants? From: Perez, Sarah. “41% Of Voice Assistant Users Have Concerns about Trust and Privacy, Report Finds.” TechCrunch, 24 Apr. 2019.

With Amazon prying its way into every nook and cranny of your home, it is no wonder people do not trust these devices. Christi Olson of Microsoft reported on the company’s recent study of trust between voice assistants and their users, which found that a startling number of people have serious concerns about their voice assistant. According to the study, 41% of voice assistant users are concerned about their privacy and about passive listening (the recording of interactions without a command being given to the voice assistant). People should be critical about how their data is being used. The survey found that 52% of users were concerned about the security of their personal data, 24% were unaware of how their data was being used, and 36% did not want any personal data collected at all. Of course, all voice assistant users should be concerned about the use of their data, but allowing none to be collected would keep the technology at a standstill and force the creators to guess what people want from their devices. The 24% of people who do not know how their data is being used are being truthful with themselves, while the remaining 76%, who believe they understand how their data is being used, are either underestimating the power of their data or overestimating their own understanding of the technology. I believe that only Amazon, Google, and Apple really know how far the data collection and analysis go. Although these numbers show skepticism toward voice assistants, they are almost the exact opposite of how people feel about the devices’ functionality.

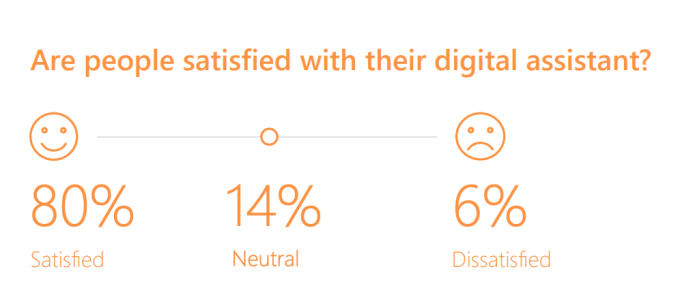

Fig. 2. Are people satisfied with their digital assistant? From: Perez, Sarah. “41% Of Voice Assistant Users Have Concerns about Trust and Privacy, Report Finds.” TechCrunch, 24 Apr. 2019.

Despite all of the malfunctions that have led to privacy violations and misheard commands, most users still enjoy their device. A large majority, 80%, are satisfied with their voice assistant, which is surprising considering how little trust users have in these devices. There is an obvious disconnect between satisfaction and trust, given that over half of the surveyed users are concerned that their data is not secure. People are enthralled with the voice assistants from Amazon, Google, and Apple, and it is obvious that they will continue to use them regardless of security.

User data is very important to technologies like this, but not all devices are created equal when it comes to security. Nowak states that in the debate of Siri vs. Alexa vs. Google Assistant, Siri is far behind the others in functionality and voice recognition, but it is also the most secure. There appears to be a direct trade-off between privacy and performance. Apple has recently added its own counterpart to Alexa skills by allowing users to create the skills themselves. This could keep the user safe, but it requires much more effort from the consumer. It comes down to this: the more data that is collected, the less privacy you will have, but the better the product you will receive.

There is no escaping the fact that better technology requires more data. This inevitably leads to privacy violations and trust issues between users and their voice assistants. There must be a balance, and one way to strike it could be an incentive program. Rather than giving up your data to these companies for free and feeling uncomfortable with your voice assistant, you could opt in to recordings instead of having to opt out of them or delete them every time you use your device. Those who understand what it means to provide data about their commands to the analysis teams, and who are confident in the product, could get something extra in return. This would be the best of both worlds: all consumers would get an evolving and improving product, and the analysts would have the data to provide it. The incentive could be as simple as a discount on another device or, in the case of Alexa, an Amazon gift card or a Prime membership discount. All of the corporations have the opportunity to offer users an incentive, but they refuse to do so. If they did, there would at least be an exchange for a product, in this case data, rather than shadily taking it from consumers and leaving them untrusting and wary of their voice assistant. The current system of data theft cannot stay in place. The only way for people to have consistent trust in these devices is for data to be held sacred by all parties and treated with respect.

Voice assistants are definitely going to stick around, considering that they provide so many things that make your life run more efficiently. Consumers will get hooked on their smooth-running lives and then have to choose between giving away personal data and getting rid of their assistant along with their menagerie of assistant-controlled devices. I feel that most people will not want to go back after having tasted the ability to arrive home with all the lights on and the garage door open. The seemingly meaningless task of turning on the lights when you enter a room will become a chore and make you feel like you’re living in the dark ages. The corporations in charge of these devices have to be respectful of people’s data and understand that people hold their data and privacy sacred and do not take kindly to having it stolen from them without their knowledge.

Works Cited

Chokshi, Niraj. “Is Alexa Listening? Amazon Echo Sent Out Recording of Couple’s Conversation.” The New York Times, The New York Times, 25 May 2018, www.nytimes.com/2018/05/25/business/amazon-alexa-conversation-shared-ec….

Fowler, Geoffrey A. “Amazon’s Alexa Is Coming for Your Microwave, Wall Clock and More.” Roanoke Times, 14 Oct. 2018, www.roanoke.com/business/amazon-s-alexa-is-coming-for-your-microwave-wa….

Kritsonis, Ted. “Privacy Casts Shadow over in-Vehicle Voice Assistants.” Flipboard, The Globe and Mail, 9 Sept. 2019, https://www.flipboard.com/@globeandmail/privacy-casts-shadow-over-in-ve….

Nowak, Peter. “Voice Assistants Only Getting Smarter as Privacy Concerns Grow.” The National, 12 Mar. 2018, https://www.thenational.ae/business/technology/voice-assistants-only-ge…

Olson, Christi. “New Report Tackles Tough Questions on Voice and AI.” Microsoft Advertising, Microsoft, 23 Apr. 2019, https://www.about.ads.microsoft.com/en-us/blog/post/april-2019/new-repo….

Perez, Sarah. “41% Of Voice Assistant Users Have Concerns about Trust and Privacy, Report Finds.” TechCrunch, TechCrunch, 24 Apr. 2019, http://www.techcrunch.com/2019/04/24/41-of-voice-assistant-users-have-c….

—. “Are people satisfied with their digital assistant?” TechCrunch, TechCrunch, 24 Apr. 2019, http://www.techcrunch.com/2019/04/24/41-of-voice-assistant-users-have-c….

—. “What concerns do people have about digital assistants?” TechCrunch, TechCrunch, 24 Apr. 2019, http://www.techcrunch.com/2019/04/24/41-of-voice-assistant-users-have-c….

“Security Research Labs GmbH.” Security Research Labs, 20 Oct. 2019, https://www.srlabs.de/bites/smart-spies/.